Virtual production using LED walls and motion-tracked cameras is one of the hottest techniques in filmmaking and visual effects. Much of the attention today rightly goes to Industrial Light and Magic with their giant LED wall virtual set and productions such as The Mandalorian. The production uses real film cameras to film performers acting in costume in front of a giant LED wall. A motion-tracking system follows each camera's position, rotation, and lens adjustments so that the virtual, but hyper-realistic, background scenery can be rendered in real-time to simulate the change in camera viewpoint. A set of Vicon optical motion-tracking cameras surrounds the set and tracks reflective white marker spheres attached in rigid configurations to each film camera. Whitman College’s new Immersive Stories Lab supports this new form of virtual movie production (though with projection screens in place of the massive LED wall).

Mandalorian motion-tracked film cameras – photo credit American Cinematographer 2/6/2020

One of the key technologies – motion-tracking for the virtual cameras – has its own history that fits within the broader history of motion capture (click here to link to the Motion Capture Society’s history). This page is a work in progress featuring historical highlights in motion-tracked virtual cameras with some connections to my own research work and creative building.

What are virtual cameras?

A virtual camera can provide any combination of these basic functionalities:

- display device which shows a real-time rendered view of a virtual 3D world or a composite of computer-generated elements and real-world video

- method for tracking the position and/or rotation of a real-world movie camera and/or moveable display device

- method for aligning the computer-generated elements to match the perspective and lens properties of a real-world camera that is providing the real-world video

- method for real-time rendering of computer-generated visuals

- method for reporting changes made to either the virtual 3D or real-world camera lens properties such as focal length

- method to signal to start, pause, or stop recording camera movements

- method for scaling, rotating, or moving the virtual world motion tracking volume so that real-world movements of the camera device produce the desired scale of motion in the virtual world

How can virtual cameras help visual storytellers?

Virtual cameras make it possible for a human camera operator to move and turn a physical camera or display screen as if it were a regular film camera. The camera operator can intuitively and rapidly create the desired camera view or movement. Because the movements of the physical camera device are translated to corresponding movements of a virtual camera in a virtual 3D environment, the application software can adjust the scale so that one footstep in the real world traverses the length of a soccer field or produces a camera move that would be difficult, unsafe, or impossible in the real world. With a virtual camera, a filmmaker can instantly teleport their viewpoint to any point in the virtual world without having to physically move. Advances in Artificial Intelligence (AI) have the potential to accelerate workflows but require careful consideration of creative and ethical implications.
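The scaling idea described above can be sketched in a few lines of Python. This is only a minimal illustration, not the method used by any particular product: the function name `map_to_virtual`, its parameters, the choice of y as the "up" axis, and the example step and field lengths are all assumptions made for the example.

```python
import math

def map_to_virtual(tracked_pos, scale=1.0, yaw_deg=0.0, offset=(0.0, 0.0, 0.0)):
    """Map a tracked real-world position (x, y, z) into virtual-world coordinates.

    Applies a uniform scale, then a rotation about the vertical (y) axis,
    then a translation. All names here are hypothetical.
    """
    x, y, z = (c * scale for c in tracked_pos)
    yaw = math.radians(yaw_deg)
    # Rotate about the y axis (y is assumed to point up).
    xr = x * math.cos(yaw) + z * math.sin(yaw)
    zr = -x * math.sin(yaw) + z * math.cos(yaw)
    ox, oy, oz = offset
    return (xr + ox, y + oy, zr + oz)

# Assumed numbers: one 0.75 m footstep scaled to cover a 105 m soccer field.
step = (0.0, 0.0, 0.75)
field_scale = 105.0 / 0.75
virtual = map_to_virtual(step, scale=field_scale)
```

Changing `offset` alone corresponds to teleporting the viewpoint, while changing `scale` switches between sweeping moves and small close-up adjustments without the operator walking any farther.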

Historical Highlights in the Development of Virtual Cameras

1980s – Equations Create Camera Motion Paths

Before graphical interface 3D software existed, programmers would write computer code to evaluate math equations to control the position and look direction of the virtual camera. Jim Blinn produced animations to visualize the movement of space probes and planetary objects while working at the Jet Propulsion Laboratory. Click here to hear an interview with Jim Blinn and examples of this early work.

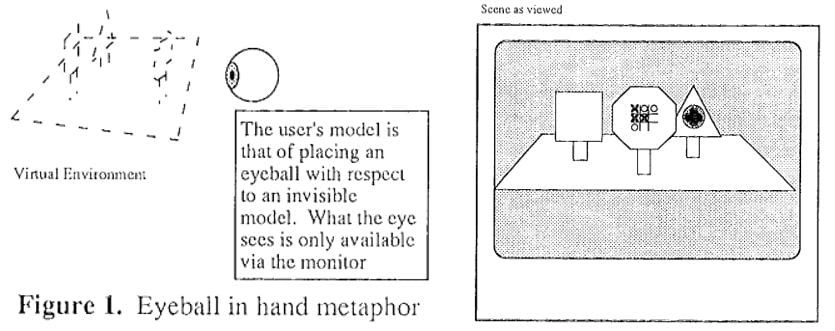
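To give a feel for what driving a camera with equations looks like, here is a small Python sketch that computes a circular orbit around a target. It is a modern illustration only and not a reconstruction of Jim Blinn's code; the function `orbit_camera` and its default radius, height, and period are made up for the example.

```python
import math

def orbit_camera(t, radius=10.0, height=3.0, period=8.0, target=(0.0, 0.0, 0.0)):
    """Return (position, look_direction) for a camera circling `target` at time t (seconds)."""
    angle = 2.0 * math.pi * t / period
    pos = (target[0] + radius * math.cos(angle),
           target[1] + height,
           target[2] + radius * math.sin(angle))
    # Look direction: unit vector from the camera position toward the target.
    d = tuple(tc - pc for tc, pc in zip(target, pos))
    length = math.sqrt(sum(c * c for c in d))
    look = tuple(c / length for c in d)
    return pos, look

# Evaluate the equations once per frame: one second of motion at 24 frames per second.
frames = [orbit_camera(i / 24.0) for i in range(24)]
```

Every change to the camera move means editing the equation or its constants and re-running the program, which is the workflow that later graphical tools and motion-tracked rigs replaced.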

1990 – The Eyeball in Hand Metaphor – Colin Ware and Steven Osborne explored different metaphors for moving a virtual camera. The “eyeball in hand” metaphor had the user moving a Polhemus magnetic sensor that acted as a stand-in for a physical camera. Movements of the physical sensor translated into natural up, down, left, right, and turning movements of the virtual camera as if you were holding the virtual camera eyeball in your hand. In these experiments the magnetic sensor was not attached to a display screen. Research article.

Eye in hand camera control metaphor

1993 – Motion Tracking Sensor Attached to Hand-held Display Screen

One of the defining features of motion-tracked virtual cameras is to provide a means for motion sensing to track the position and/or orientation of a moveable display screen so that as the operator moves or turns the display screen, the virtual 3D camera view will change accordingly. In this experiment, an Ascension Technology magnetic position and orientation (6D) sensor on a wired cable was attached to a four-inch portable television. A Silicon Graphics workstation generated the real-time 3D graphics in response to camera position and orientation data from the motion tracker. A video camera filmed the screen of the SGI workstation and cabled this image to the hand-held screen. This image from the 1993 paper by Fitzmaurice, Zhai, and Chignell of University of Toronto shows the hand-held screen with the cable from the Ascension sensor. A push button was also attached to the top of the screen.

Four-inch portable TV with attached magnetic motion tracker and push button atop the screen.

1997 – Analog Dial Boxes

Early computer graphics workstations from Silicon Graphics and Sun used dial boxes, which provided a set of knobs that could adjust values within a range. For example, one knob could adjust the virtual camera pan rotation axis or the lens focal length.

SGI dial box control

1998 – Graphical User Interfaces Make Camera Moves

Writing mathematical equations and computer code was not the easiest way to create virtual camera motion. Early graphical user interfaces for creating animation such as Alias Wavefront, the precursor to Maya, provided mouse and keyboard controls to animate the virtual camera. Skip to the 23 minute mark of this Wavefront promotional video to see an example.

1998 – Constellation Wide-Range Tracking

Tracking studio cameras over large movie sets was a challenge for existing magnetic tracking systems, which lose accuracy as magnetic signal strength diminishes with distance from the magnetic field source. Optical outside-in tracking systems required increasingly large numbers of specialized cameras to track the movement of markers over large movie sets. The concept of constellation tracking was to apply an inside-out approach where the tracking device “looked outward” to sense stationary landmarks to determine its position. This idea is analogous to navigating by looking at known stars in the sky. This method would be less expensive to implement since the multitude of landmark objects could be less expensive than specialized optical cameras. In the first version of constellation tracking used in the InterSense IS-600 and later IS-900, the constellation comprised a number of sonic emitters mounted at fixed locations overhead. The tracked device used microphones to measure distance and angles as shown in this figure from the 1998 SIGGRAPH paper. Click to access the InterSense whitepaper.

InterSense Constellation Tracking Diagram

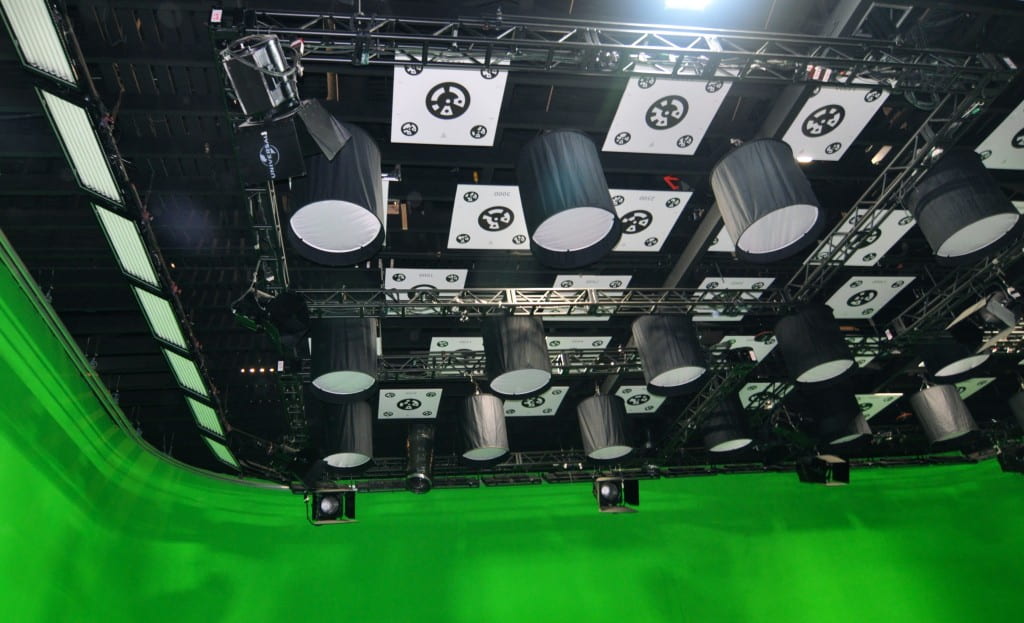
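The geometric core of the inside-out idea, recovering a position from measured distances to landmarks at known locations, can be sketched as follows. This is a simplified illustration and not InterSense's algorithm: it assumes exactly three emitters on a flat ceiling, noise-free distances, and a device below the ceiling, and it ignores the angle measurements and inertial data mentioned in the text. The function name `locate_below_ceiling` and the example coordinates are hypothetical.

```python
import math

def locate_below_ceiling(beacons, distances, ceiling_height):
    """Estimate (x, y, z) from distances to three ceiling beacons at known (x, y)."""
    (x0, y0), (x1, y1), (x2, y2) = beacons
    d0, d1, d2 = distances
    # Subtracting the first sphere equation from the other two cancels the
    # quadratic terms and leaves two linear equations in x and y.
    a11, a12 = 2 * (x1 - x0), 2 * (y1 - y0)
    a21, a22 = 2 * (x2 - x0), 2 * (y2 - y0)
    b1 = (x1**2 + y1**2) - (x0**2 + y0**2) - (d1**2 - d0**2)
    b2 = (x2**2 + y2**2) - (x0**2 + y0**2) - (d2**2 - d0**2)
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("beacons must not be collinear")
    # Solve the 2x2 system with Cramer's rule.
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    # Recover the height from the first distance; the device is assumed
    # to be below the ceiling.
    drop_sq = d0**2 - (x - x0)**2 - (y - y0)**2
    z = ceiling_height - math.sqrt(max(drop_sq, 0.0))
    return (x, y, z)

# Assumed layout: three emitters on a 3 m ceiling, device actually at (1, 2, 1.5).
beacons = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
true_pos = (1.0, 2.0, 1.5)
distances = [math.dist(true_pos, (bx, by, 3.0)) for bx, by in beacons]
estimate = locate_below_ceiling(beacons, distances, ceiling_height=3.0)
```

The sketch also shows why the surveyed emitter positions matter: any error in `beacons` feeds straight into the computed position.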

Later, the InterSense IS-1200 VisTracker substituted less costly printed fiducial markers for the sonic emitters and used a computer vision camera to track the position of the studio camera relative to the constellation of fiducials. This image shows printed fiducials on a studio ceiling. The printed fiducials can also be attached to walls or floors.

InterSense tracking devices also include an inertial measurement unit (IMU) to combine orientation data from an inertial sensor with time-of-flight distance for redundancy and increased accuracy. One drawback of these first constellation systems was that the installer had to precisely measure and record the position of each element of the constellation. For best results, InterSense recommended using a surveying instrument known as a total station. When pinning paper fiducials on overhead ceiling tiles, the installer could reduce the measuring work by using the known spacing of the ceiling tile grid. More recent implementations of the constellation tracking concept would address this limitation.

1999 – HiBall Tracking – University of North Carolina at Chapel Hill

The HiBall tracking system is a variation on the inside-out constellation tracking concept that allowed for expansive tracking areas by adding the desired number of ceiling tiles distinguished by either a checker-board coloring or embedded LEDs. The HiBall device incorporates a set of six lenses to view the ceiling tiles to compute the position and orientation of the HiBall. This technology was commercialized by 3rdTech and was used in films such as Surf’s Up (2007). Click here to read a PDF document that describes how this production used the HiBall tracker to create a hand-held look for this animated documentary of penguin surfers.

1999 – Boom Chameleon (view only version at SIGGRAPH 1999)

A moveable display screen was mounted to a FakeSpace mechanical articulated arm that enabled a user to move and turn the display screen to adjust the position of a virtual camera. This configuration was demonstrated on the SIGGRAPH exhibit floor in 1999. This concept was extended in 2005 to add a touch screen and voice recording features to enable annotation of 3D designs. This photo is taken from the 2005 paper.

Boom Chameleon Virtual Camera Navigation for 3D Design

2003 – Handwheel pan, tilt, and roll controls for Polar Express

Handwheel controller for Polar Express

Handwheel controls like the ones used on real-world studio cameras were adapted to control the virtual camera for the movie Polar Express, which was released in 2004. The virtual camera operator would turn a handwheel to pan the camera (left-to-right rotation), tilt the camera (up-down rotation), roll the camera, or move the camera position forward or backward along a virtual dolly track path. The team involved in the virtual camerawork included senior visual FX supervisor Ken Ralston and director Robert Zemeckis. Click here to watch a video from Sony Pictures Imageworks about the virtual camera work in Polar Express.

2003 – Lightcraft Prezion (need to confirm year of first product)

Lightcraft, founded by Eliot Mack, developed a realtime visual effects system that operates on set to film visual effects shots that integrate green-screen virtual sets and characters with live action performances. The system was used in the production of Tim Burton’s Alice in Wonderland (2010), V the Television series, and ABC’s Once Upon a Time series. The system tracks studio cameras over an expansive set using an overhead constellation of fiducial markers combined with a custom-built rig incorporating an InterSense IS-1200 VisTracker. Click here to watch behind the scenes of Once Upon a Time.

2005 – GameCaster virtual camera system for video game tournaments

GameCaster developed virtual camera controllers that combined a gyroscope sensor to pan and tilt the virtual camera while thumb-mounted analog joysticks provided change of position. Their software simulated a live multi-camera game sporting event, where the operators could cue live the most interesting viewpoint among the available virtual cameras. GameCaster filed a patent claiming invention of the motion-tracked virtual camera controller. The patent was initially granted (2005) but overturned in 2015 after Dreamworks requested a re-examination of the patent. This legal episode demonstrates how many great ideas build upon years of prior work and the challenge that patent examiners have in keeping up with rapidly evolving technology developments.

Video sample from Gamecaster’s Battlefield 2142 Invitational.

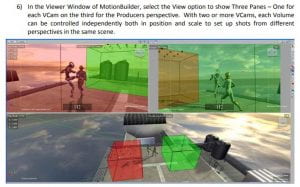

2008 – InterSense Introduces the IS-900 VCam Virtual Camera Tracking System

Motion-tracking company InterSense created one of the first commercial motion-tracked virtual camera systems for use in visual effects. InterSense had been working behind the scenes with filmmakers including Robert Zemeckis to consult and gather feedback on what features would be desirable in a commercial virtual camera system. For the first VCam, InterSense embedded one of their hybrid inertial-acoustic position and orientation trackers, along with digital and analog controls, into a Panasonic camera body. Virtual filmmakers would use a plugin for Autodesk MotionBuilder to create a realistic simulation of hand-held camera moves, combined with buttons to start and stop recording and analog joysticks to adjust the position and size of the virtual camera motion tracking volume. The high entry price of $50K limited its adoption. Dean Wormell was one of the principal developers of the InterSense VCam.

- InterSense VCam

The ability to dynamically adjust the position, size, and orientation of the virtual camera volume made it possible for the operator to switch between a long-range fly-in of the Golden Gate Bridge and smaller movements for close-up shots of Ginormica battling a robot on the bridge in Monsters Versus Aliens (2009). Click here to watch a behind-the-scenes video from Monsters Versus Aliens. The virtual camera work begins at about the 3 minute mark.

To do – Find examples of later films that varied the scale of the virtual to physical world mapping.

2009 – InterSense VCam Lite

InterSense produced a lower-cost version of the VCam based on their inertial sensor that tracked only the orientation while two analog joysticks provided a way to change the position of the virtual camera. The VCam Lite was designed to support a larger preview monitor and more flexible options for arranging the hand controls and components using industry-standard rod and clamp camera rig assemblies. I remember hanging out in the InterSense booth during SIGGRAPH 2009 chatting with Dean Wormell. This device was so amazing that I decided to build one for myself since I didn’t have the approximately $30K needed to buy one. I commissioned an electrical engineer friend to assemble a set of digital buttons and analog stick controls that could be plugged into the button board I2C controller that was an optional add-on to the InterSense InertiaCube3 inertial sensor. I wrote C++ software to read the orientation data, digital button data, and analog joystick data for use in my 3D animation programs. Dean Wormell shared the VCam user manual and Brian Calus of InterSense tech support helped me with interpreting the VCam analog stick and digital buttons.

- My Homebrew InterSense VCam Lite

- InterSense VCam Lite – homebuilt

2009 – Avatar’s virtual camera

Avatar, released in December of 2009, brought the use of motion-tracked virtual cameras into the spotlight. InterSense provided the underlying digital buttons, analog joysticks, and MotionBuilder plugin software that went into making the custom-built virtual camera rig for James Cameron. LightWave 3D with its InterSense VCam support was used to fly the virtual camera to scout a low-res realtime preview of the Avatar virtual set. Rob Powers, one of the people involved in Avatar and later LightWave 3D, confirmed the InterSense connection in a Jan. 5, 2011 interview with CgChannel.

I’ve been working with InterSense for a few years. On Avatar, we were doing these explorations, these set discoveries – like a location scouting process, but virtual – and [InterSense used] the feedback we gave them to develop the VCam.

Avatar virtual camera rig photo taken at SIGGRAPH 2011 by Glen Vigus

Photo by Todd Lappin taken July 27, 2010 shared by Creative Commons License.

Avatar’s virtual camera rig

In demonstrations of the Avatar virtual camera you can see white marker spheres attached to the rig so it could be tracked by the same optical cameras that were used to track the motion-capture performers in the large production stage.

Amazing Demonstration of the virtual camera invented for AVATAR

Oct 21, 2010 All Games Network

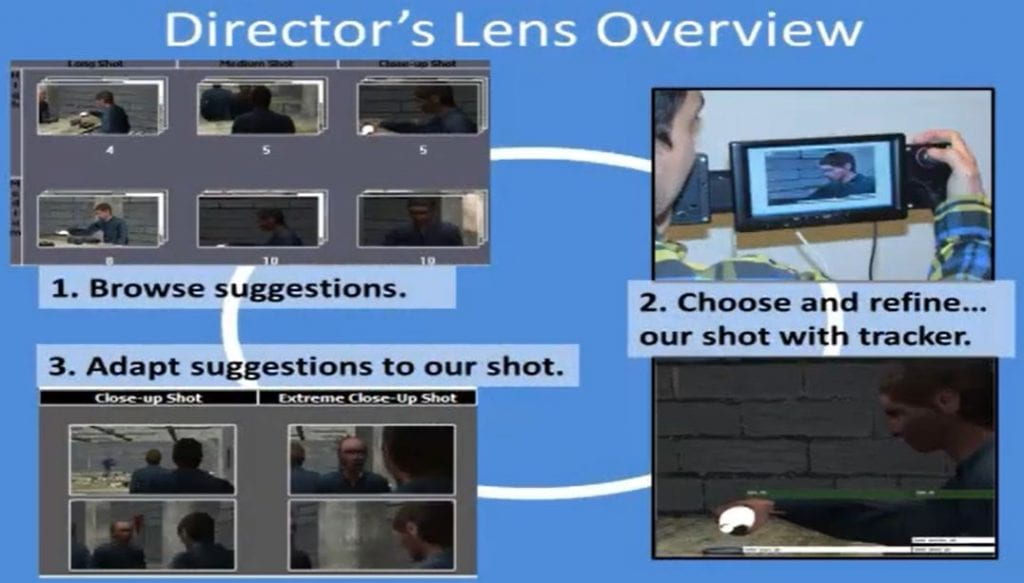

2011 – Intelligent Shot Composition and Editing Assist

For my year-long sabbatical I pitched an idea to research colleague Marc Christie at INRIA. The idea was to apply Artificial Intelligence (AI) algorithms to a hand-held motion-tracked camera rig so that the AI could learn the shot composition and editing style of its human operator. Each time the filmmaker recorded a shot, the AI would update a statistical model of when the operator cut from one type of shot to another. Over time, the system could, upon request of the human operator, propose a variety of virtual camera shots that might follow the current shot. The system was based on the human-in-the-loop model of human-AI collaboration. The AI would do the virtual leg work of scouting out a variety of promising virtual camera viewpoints for filming the next shot. The human operator could control when the AI computed and presented a palette of suggested next virtual camera viewpoints. The palette of suggestions was arranged by various cinematic properties such as shot distance or angle.

Human-in-the-loop machine learning of cinematic editing
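The idea of a statistical model of cuts between shot types can be illustrated with a simple transition-count table. This is a minimal sketch of the general idea and not the actual Director's Lens implementation; the class `ShotTransitionModel`, its methods, and the shot labels are hypothetical names chosen for the example.

```python
from collections import Counter, defaultdict

class ShotTransitionModel:
    """Counts how often the operator cuts from one shot type to another."""

    def __init__(self):
        # counts[from_shot][to_shot] = number of observed cuts
        self.counts = defaultdict(Counter)

    def record_cut(self, from_shot, to_shot):
        """Update the model each time the operator records a new shot."""
        self.counts[from_shot][to_shot] += 1

    def suggest(self, current_shot, k=3):
        """Return up to k (shot_type, relative_frequency) pairs, most frequent first."""
        following = self.counts.get(current_shot)
        if not following:
            return []
        total = sum(following.values())
        return [(shot, n / total) for shot, n in following.most_common(k)]

model = ShotTransitionModel()
for a, b in [("wide", "medium"), ("wide", "medium"),
             ("wide", "close-up"), ("medium", "close-up")]:
    model.record_cut(a, b)

# Called only when the operator asks for suggestions (human in the loop).
suggestions = model.suggest("wide")
```

Because `suggest` runs only on request, the operator stays in control of when the system proposes anything, and every recorded shot refines the counts.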

The Director’s Lens virtual camera rig had a pair of handgrips each with a set of digital buttons and an analog stick set on either side of a 7-inch LCD touch screen. The operator would see the AI suggested shots and could press the touch screen to instantly teleport the virtual camera into that shot. The operator could accept and record the shot as proposed, or enable the motion tracking to manually refine the shot.

This human-in-the-loop workflow accelerated virtual filmmaking by combining the shooting and editing steps into one.

During the development of Director’s Lens, my coauthors and I had conversations with Dean Wormell of InterSense. Later at DigiPro 2012, I discussed this system with Joe Letteri (Avatar’s senior visual effects supervisor) and Sebastian Sylwan (CTO of WETA Digital). At the end of Joe Letteri’s Keynote talk, I asked Joe for his opinion about the idea of having an AI suggest camera angles to accelerate virtual movie scouting. I remember him saying that he would love to see it and try it, but that not everyone he knew would be comfortable with collaborating with an AI.

Read more about Director’s Lens here.

Video demo of the Director’s Lens

2017 – StarTracker from Mo-Sys and RedSpy from Stype

These two independently developed camera tracking systems update the constellation tracking concept introduced by InterSense and the UNC HiBall. In this case, the constellation comprises a set of inexpensive passive reflective markers that can be attached to the studio ceiling or floor. The tracking device attached to the studio camera includes an inertial gyroscope and accelerometer along with an infrared light emitter. The infrared light reflects off the passive markers. These systems provided a way for installers to walk about the tracked space to calibrate the position of the constellation markers. Click here to watch the 2017 product announcement video from the National Association of Broadcasters Conference.

Click here to watch the Mo-Sys StarTracker introduction video.

Do-it Yourself Virtual Camera Rigs

Up to this point, virtual camera rigs that provided a set of conveniently placed digital buttons and analog sticks were either custom-built one-of-a-kind devices or expensive commercial products. During 2011, I created several different forms of handgrip with an embedded 1/4-20 threaded mount point to make it easier to add a joystick controller to a virtual camera rig built from industry-standard rod and clamp fixtures. This made it possible to build highly configurable virtual camera rigs. Click here for more details on these homebrew virtual camera rigs.

home-built virtual camera rig using Wiimote controls and 7-inch tablet

Drexel alumnus Girish Balakrishnan demonstrated the SmartVcs, which used a Sony PS3 Move camera for tracking paired with a custom 3D-printed mount that fixed two Sony PS3 controllers alongside an iPad tablet. Girish created Unity game engine software to play virtual 3D world scenes and to record and edit virtual camera moves.