In this course, students applied concepts from intelligent affective agents, intelligent tutoring systems, natural language understanding, immersive games using motion tracking and projection, Arduino robot pathfinding, and adaptive interfaces that improved accessibility through eye tracking, motion tracking, and speech input.

This class was the first to use the new Immersive Stories Lab, which came online in time for the final projects. Here are some highlights from the final project demos.

Follow Bot by Andrew and Cameron – An Arduino mobile robot outfitted with passive reflective marker dots follows a target held by Ankit. The students' software, running on the motion-capture server, computed movement commands and transmitted them over WiFi to the Arduino bot.

- Robot seeks a target

- Compute motion path from tracked position of robot to target markers

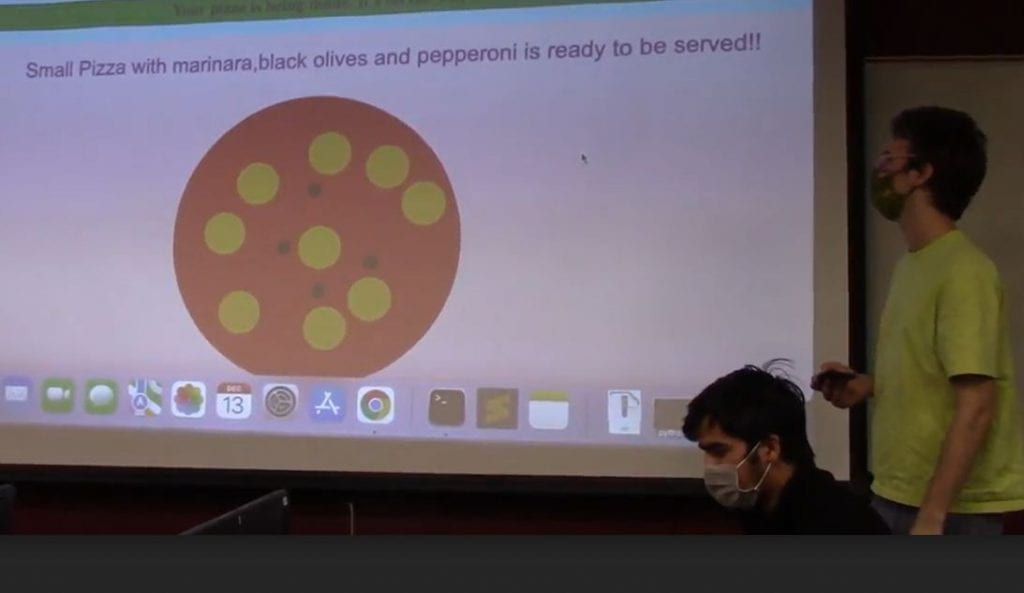
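The core of Follow Bot is turning two tracked positions into a drive command. The sketch below is not the students' code; it assumes (hypothetically) that the motion-capture server reports 2D floor-plane coordinates for one marker at the robot's front and one at its rear, that the bot uses differential drive with wheel speeds in [-1, 1], and that commands go out as a simple comma-separated text line. The WiFi transport itself is left out.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float
    y: float
    heading: float  # radians, counterclockwise from the +x axis


def robot_pose(rear, front):
    """Estimate robot pose from two tracked markers (hypothetical front/rear layout)."""
    cx = (rear[0] + front[0]) / 2
    cy = (rear[1] + front[1]) / 2
    heading = math.atan2(front[1] - rear[1], front[0] - rear[0])
    return Pose(cx, cy, heading)


def follow_command(pose, target, stop_radius=0.3, turn_gain=1.5, max_speed=1.0):
    """Return (left, right) wheel speeds that steer the robot toward the target."""
    dx = target[0] - pose.x
    dy = target[1] - pose.y
    if math.hypot(dx, dy) < stop_radius:
        return 0.0, 0.0  # close enough: stop instead of bumping the target
    bearing = math.atan2(dy, dx)
    # Heading error wrapped into [-pi, pi); positive means the target is to the left.
    error = (bearing - pose.heading + math.pi) % (2 * math.pi) - math.pi
    # Drive forward less the further the target is off-axis; never reverse.
    forward = max_speed * max(0.0, math.cos(error))
    turn = turn_gain * error
    left = max(-1.0, min(1.0, forward - turn))
    right = max(-1.0, min(1.0, forward + turn))
    return left, right


def encode_command(left, right):
    """Hypothetical wire format: one text line per command, e.g. '1.00,-1.00\\n'."""
    return f"{left:.2f},{right:.2f}\n"
```

With the robot at the origin facing +x, a target straight ahead yields full speed on both wheels, while a target directly to its left spins it in place (left wheel backward, right wheel forward). Scaling forward speed by the cosine of the heading error is one simple way to keep the bot from driving away from a target that is behind it.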

Voice Pizza by Ankit and Buddy – Speech is converted to text and processed by natural language understanding software written in Python. Example order: “Let’s make a small pizza with marinara, black olives, and pepperoni!”

Ordering Pizzas by Voice

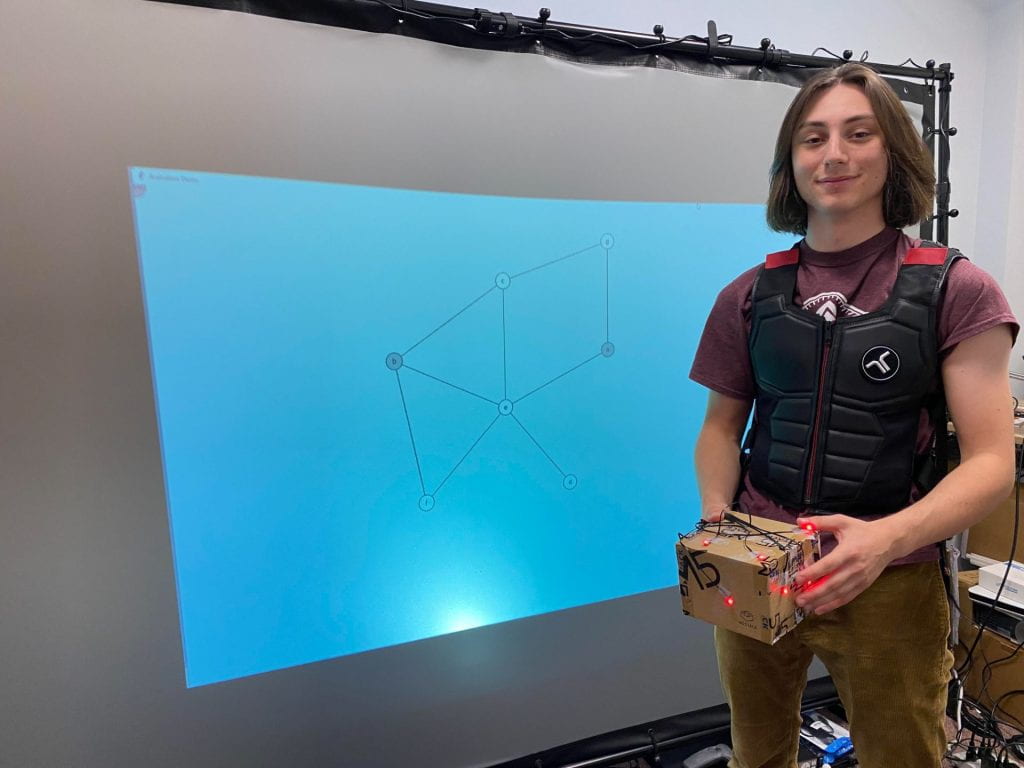
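Once speech has become text, the understanding step has to pull structured slots (size, sauce, toppings) out of the sentence. The students' NLU approach is not described here, so the sketch below swaps in plain keyword matching against small hypothetical vocabularies; it only illustrates the slot-filling idea, not their implementation.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical menu vocabularies; a real system would load these from the menu.
SIZES = ("small", "medium", "large")
SAUCES = ("marinara", "alfredo", "pesto")
TOPPINGS = ("black olives", "pepperoni", "mushrooms", "onions", "sausage", "green peppers")


@dataclass
class PizzaOrder:
    size: Optional[str] = None
    sauce: Optional[str] = None
    toppings: List[str] = field(default_factory=list)


def _find(phrase, text):
    """Return the match for a whole-word phrase in text, or None."""
    return re.search(rf"\b{re.escape(phrase)}\b", text)


def parse_order(text):
    """Fill order slots by keyword matching on the transcribed text."""
    text = text.lower()
    order = PizzaOrder()
    order.size = next((s for s in SIZES if _find(s, text)), None)
    order.sauce = next((s for s in SAUCES if _find(s, text)), None)
    # Keep toppings in the order the speaker mentioned them.
    found = []
    for topping in TOPPINGS:
        match = _find(topping, text)
        if match:
            found.append((match.start(), topping))
    order.toppings = [topping for _, topping in sorted(found)]
    return order
```

For the example order above, this yields size `small`, sauce `marinara`, and toppings `black olives` then `pepperoni`. Keyword matching breaks down on negation ("no olives") or quantities, which is where a fuller NLU pipeline earns its keep.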

Haptic Navigation Aid with Motion Tracking

Prototyping a haptic navigation aid with computed pathfinding and position tracking by a PhaseSpace active-LED system.
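A haptic navigation aid needs two pieces: a path to the destination and a way to turn the next step of that path into a vibration the wearer can feel. The sketch below is a hypothetical illustration, not the project's design. It assumes tracked positions have already been snapped to cells of an occupancy grid (0 = free, 1 = blocked), uses breadth-first search (shortest path on an unweighted grid) in place of whatever planner the project used, and assumes four vibration motors worn at the front, right, back, and left.

```python
from collections import deque

# Grid step for each compass direction as (row delta, col delta); row 0 is north.
STEPS = {"north": (-1, 0), "east": (0, 1), "south": (1, 0), "west": (0, -1)}
COMPASS = ["north", "east", "south", "west"]  # clockwise order
CUES = ["front", "right", "back", "left"]  # hypothetical motor placement, clockwise from front


def bfs_path(grid, start, goal):
    """Shortest 4-connected path from start to goal as a list of cells, or None."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            break
        for dr, dc in STEPS.values():
            nxt = (cell[0] + dr, cell[1] + dc)
            if (0 <= nxt[0] < rows and 0 <= nxt[1] < cols
                    and grid[nxt[0]][nxt[1]] == 0 and nxt not in came_from):
                came_from[nxt] = cell
                queue.append(nxt)
    if goal not in came_from:
        return None
    # Walk parent links back from the goal, then reverse.
    path, cell = [], goal
    while cell is not None:
        path.append(cell)
        cell = came_from[cell]
    return path[::-1]


def haptic_cue(path, user_facing):
    """Pick the motor to pulse for the path's first step, relative to the user's facing."""
    if path is None or len(path) < 2:
        return None  # no route, or already at the goal
    delta = (path[1][0] - path[0][0], path[1][1] - path[0][1])
    step_dir = next(name for name, d in STEPS.items() if d == delta)
    offset = (COMPASS.index(step_dir) - COMPASS.index(user_facing)) % 4
    return CUES[offset]
```

Re-running both functions each time the tracker reports a new position keeps the cue current as the wearer moves or turns: the same eastward step is cued "right" when facing north and "left" when facing south.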